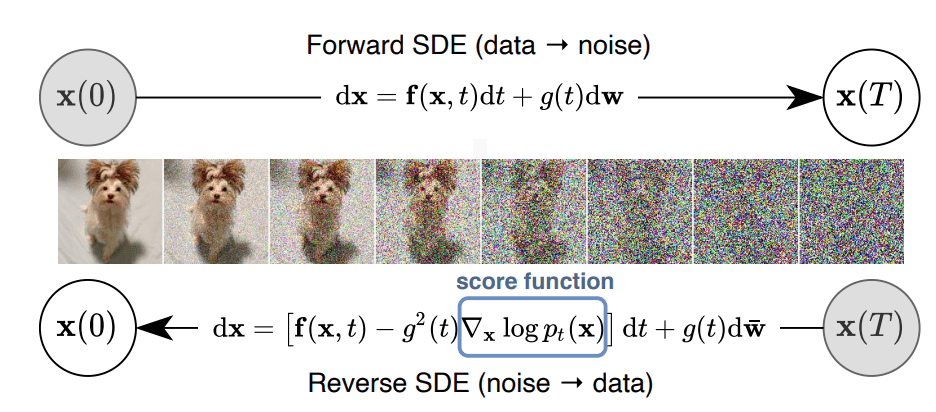

Diffusion models have recently emerged as the state of the art in generative modelling. Two of the most popular formulations are score matching with Langevin dynamics (SMLD) [Son19G] and denoising diffusion probabilistic models (DDPM) [Ho20D]. Both generate data by first corrupting training samples with gradually increasing noise and then training a model to reverse this process. They differ in what they learn. SMLD estimates the score of the data distribution, i.e. the gradient of the log probability density with respect to the data, at each noise level, and then uses Langevin dynamics to sample along a decreasing noise sequence. DDPM instead trains a sequence of probabilistic models, each reversing one noising step, and exploits the known functional form of the reverse distributions (Gaussians) to make training tractable.
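To make the SMLD sampling procedure concrete, here is a minimal sketch of annealed Langevin dynamics in Python with NumPy. It assumes a hypothetical 1D toy distribution (an equal-weight mixture of two Gaussians) whose noise-perturbed score is known in closed form, so the exact score stands in for the trained network of [Son19G]. The noise levels, `eps` and the number of steps per level are illustrative choices; the step-size rule α_i = ε σ_i² / σ_L² is modelled on [Son19G], but this is not that paper's exact configuration.

```python
import numpy as np

# Hypothetical toy data distribution: equal-weight mixture of two 1D Gaussians.
MEANS = np.array([-2.0, 2.0])
STD = 0.5


def noisy_score(x, sigma):
    """Exact score of the data distribution convolved with N(0, sigma^2).

    In SMLD this would be a learned network s_theta(x, sigma).
    """
    var = STD**2 + sigma**2
    # Unnormalised log-densities per component, shape (n, 2); the variance is shared,
    # so the normalising constants cancel in the responsibilities below.
    logp = -0.5 * (x[:, None] - MEANS[None, :]) ** 2 / var
    # Posterior responsibility of each component (numerically stable softmax).
    w = np.exp(logp - logp.max(axis=1, keepdims=True))
    w /= w.sum(axis=1, keepdims=True)
    # Score of a mixture = responsibility-weighted sum of the component scores.
    return (w * (MEANS[None, :] - x[:, None]) / var).sum(axis=1)


def annealed_langevin(n, sigmas, steps_per_level=100, eps=2e-5, seed=0):
    """Run Langevin dynamics at each noise level, from the largest to the smallest."""
    rng = np.random.default_rng(seed)
    # Start from broad noise matching the largest noise level.
    x = rng.normal(0.0, sigmas[0], size=n)
    for sigma in sigmas:  # decreasing noise sequence
        # Step size shrinks with the noise level (rule modelled on [Son19G]).
        alpha = eps * sigma**2 / sigmas[-1] ** 2
        for _ in range(steps_per_level):
            z = rng.standard_normal(n)
            # Langevin update: gradient step on log-density plus injected Gaussian noise.
            x = x + 0.5 * alpha * noisy_score(x, sigma) + np.sqrt(alpha) * z
    return x


sigmas = np.geomspace(10.0, 0.01, num=10)  # 10 geometrically spaced levels, high to low
samples = annealed_langevin(5000, sigmas)
```

Starting at a large noise level matters here: at σ = 10 the perturbed density is essentially a single broad Gaussian, so samples are split evenly between the two modes before these separate at lower noise levels. Plain Langevin dynamics at the smallest noise level alone would mix poorly between the modes.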

Despite their different formulations, both methods rely on the invertibility of diffusion processes and can be unified under the formalism of stochastic differential equations (SDEs). [Son21S] proposes such a unified framework. By adapting the formalism of SDEs to modern deep generative models, the authors make the following theoretical and practical contributions:
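For reference, the central equations of the framework can be written as follows, in notation close to that of [Son21S]: $\mathbf{f}$ is the drift, $g$ the diffusion coefficient, $\mathbf{w}$ a standard Wiener process, $\bar{\mathbf{w}}$ a Wiener process running backwards in time, and $p_t$ the marginal density at time $t \in [0, T]$. The forward SDE (left) turns data into noise, and its time reversal (right), which depends on the data only through the score $\nabla_{\mathbf{x}} \log p_t$, turns noise back into data:

$$
\mathrm{d}\mathbf{x} = \mathbf{f}(\mathbf{x}, t)\,\mathrm{d}t + g(t)\,\mathrm{d}\mathbf{w},
\qquad
\mathrm{d}\mathbf{x} = \left[\mathbf{f}(\mathbf{x}, t) - g(t)^2\,\nabla_{\mathbf{x}} \log p_t(\mathbf{x})\right]\mathrm{d}t + g(t)\,\mathrm{d}\bar{\mathbf{w}}.
$$

The score is approximated by a time-dependent network $\mathbf{s}_\theta(\mathbf{x}, t)$, trained with a weighted denoising score matching objective, where $\lambda(t) > 0$ is a weighting function and $p_{0t}$ is the transition kernel of the forward SDE:

$$
\theta^* = \arg\min_\theta \; \mathbb{E}_t\Big\{ \lambda(t)\, \mathbb{E}_{\mathbf{x}(0)}\, \mathbb{E}_{\mathbf{x}(t) \mid \mathbf{x}(0)} \big[ \| \mathbf{s}_\theta(\mathbf{x}(t), t) - \nabla_{\mathbf{x}(t)} \log p_{0t}(\mathbf{x}(t) \mid \mathbf{x}(0)) \|_2^2 \big] \Big\}.
$$

SMLD and DDPM then correspond to discretisations of two particular choices of $\mathbf{f}$ and $g$, the variance exploding (VE) and variance preserving (VP) SDEs, where $\sigma(t)$ is the noise scale and $\beta(t)$ the noise schedule:

$$
\text{VE (SMLD):}\quad \mathrm{d}\mathbf{x} = \sqrt{\frac{\mathrm{d}[\sigma^2(t)]}{\mathrm{d}t}}\,\mathrm{d}\mathbf{w},
\qquad
\text{VP (DDPM):}\quad \mathrm{d}\mathbf{x} = -\tfrac{1}{2}\beta(t)\,\mathbf{x}\,\mathrm{d}t + \sqrt{\beta(t)}\,\mathrm{d}\mathbf{w}.
$$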

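- flexible sampling: general-purpose numerical solvers for SDEs have been an active research field in mathematics for decades, and in principle any of them can be used to sample from the reverse-time SDE. Among the most promising for generative modelling are predictor-corrector samplers, which combine a numerical SDE solver with score-based MCMC methods such as Langevin dynamics, and probability flow ODEs, which give a deterministic sampler with the same marginal distributions as the SDE.

- controllable generation: since sampling only requires the score, the reverse-time SDE can be conditioned on additional information. By Bayes' rule, the score of a class-conditional distribution equals the unconditional score plus the gradient of the log-likelihood of a classifier trained on noisy inputs. This enables class-conditional generation (e.g. asking for pictures of dogs or cats instead of sampling randomly from both classes) without retraining the score model. For examples of how this can be implemented with DDPMs, see the recent paper by OpenAI [Nic22G].

- unified framework: the work unifies previous approaches under a single formalism and extends them to continuous time, with SMLD and DDPM corresponding to discretisations of two particular SDEs. This brings numerous mathematical benefits, e.g. the possibility of using classical, well-studied numerical solvers with stability guarantees under broad assumptions, such as Euler-Maruyama and stochastic Runge-Kutta methods.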
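As a rough sketch of the sampling side of the framework, the following Python/NumPy code integrates the reverse-time SDE with the Euler-Maruyama method and, alternatively, the deterministic probability flow ODE dx/dt = f - ½ g² ∇ log p_t with a plain Euler method. Several assumptions are made for illustration only: the data are a hypothetical 1D two-Gaussian mixture, the forward process is a VE SDE (f = 0) with a linear noise scale σ(t) = σ_max · t (chosen because it gives closed-form marginals, not the schedule used in [Son21S]), the exact score replaces a trained network, and the prior at t = 1 is approximated by N(0, σ_max²). The corrector (Langevin) steps of a full predictor-corrector sampler are omitted.

```python
import numpy as np

# Hypothetical toy setup: 1D equal-weight mixture of two Gaussians, diffused by the
# VE SDE dx = g(t) dw with sigma(t) = SIGMA_MAX * t, so g(t)^2 = d[sigma(t)^2]/dt.
MEANS = np.array([-2.0, 2.0])
STD = 0.5
SIGMA_MAX = 10.0


def g2(t):
    """Squared diffusion coefficient g(t)^2 = d/dt (SIGMA_MAX * t)^2."""
    return 2.0 * SIGMA_MAX**2 * t


def score(x, t):
    """Exact score of the marginal p_t; stands in for a trained network s_theta(x, t)."""
    var = STD**2 + (SIGMA_MAX * t) ** 2
    logp = -0.5 * (x[:, None] - MEANS[None, :]) ** 2 / var
    w = np.exp(logp - logp.max(axis=1, keepdims=True))
    w /= w.sum(axis=1, keepdims=True)
    return (w * (MEANS[None, :] - x[:, None]) / var).sum(axis=1)


def reverse_sde_euler_maruyama(x, n_steps, rng):
    """Integrate dx = [f - g^2 * score] dt + g dw_bar from t = 1 back to t = 0 (f = 0)."""
    h = 1.0 / n_steps
    for i in range(n_steps):
        t = 1.0 - i * h
        z = rng.standard_normal(x.shape)
        # Time runs backwards (dt = -h), so -g^2 * score * dt becomes +g^2 * score * h.
        x = x + g2(t) * score(x, t) * h + np.sqrt(g2(t) * h) * z
    return x


def probability_flow_euler(x, n_steps):
    """Integrate the probability flow ODE dx/dt = f - 0.5 * g^2 * score from t = 1 to 0."""
    h = 1.0 / n_steps
    for i in range(n_steps):
        t = 1.0 - i * h
        # Deterministic drift only: no noise is injected.
        x = x + 0.5 * g2(t) * score(x, t) * h
    return x


rng = np.random.default_rng(0)
# Approximate prior at t = 1: N(0, SIGMA_MAX^2).
x_T = rng.normal(0.0, SIGMA_MAX, size=5000)
sde_samples = reverse_sde_euler_maruyama(x_T.copy(), n_steps=1000, rng=rng)
ode_samples = probability_flow_euler(x_T.copy(), n_steps=1000)
```

Both samplers should recover the two modes at ±2. The ODE version maps each prior sample to a single output, so the same noise always yields the same sample; in [Son21S] this deterministic map is also what enables exact likelihood computation.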

Drawing on techniques from statistical mechanics, this work sets a new state of the art in unconditional image generation on CIFAR-10 while introducing the formalism of SDEs to the world of generative models. The combination of these two disciplines is an exciting and very promising new frontier for machine learning.