Anomaly detection in time series is a challenging task because of the complex dependencies within the data. [Xu22A] (spotlight at ICLR 2022) tries to exploit exactly these relationships to identify anomalies. The idea is simple: nominal points are likely to exhibit long-range associations with the rest of the series, while anomalies are erratic, so at best we can expect them to show local associations with adjacent time points.

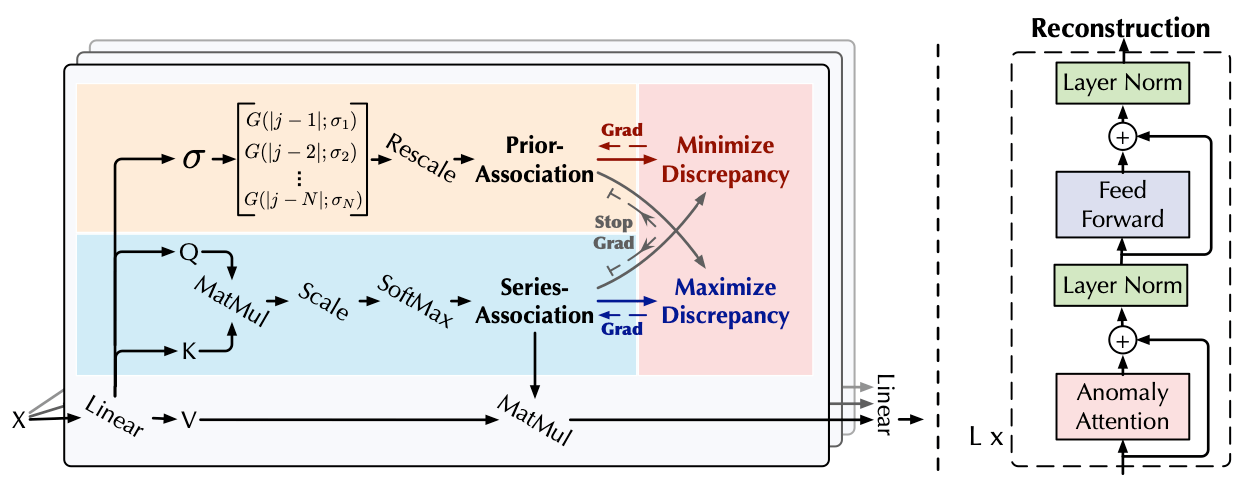

The authors revisit the transformer architecture to integrate this idea. Instead of computing only the usual attention weights in a transformer layer, which they call series associations, they also compute what they call prior associations. These come from a Gaussian kernel over the temporal distance between two points, with a learnable scale, and are therefore constrained to concentrate on adjacent time points.
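The two kinds of association can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' implementation: the function names are made up for this example, the scale `sigma` is passed in directly instead of being learned, and a single attention head without projection matrices is assumed.

```python
import numpy as np


def prior_association(sigma):
    """Row-normalised Gaussian kernel over the temporal distance |i - j|.

    sigma: array of shape (N,), one positive scale per time point.
    Assumption: in a real model sigma would be produced by a learned layer.
    """
    n = sigma.shape[0]
    idx = np.arange(n)
    dist = np.abs(idx[:, None] - idx[None, :])  # (N, N) temporal distances
    s = sigma[:, None]
    kernel = np.exp(-dist**2 / (2.0 * s**2)) / (np.sqrt(2.0 * np.pi) * s)
    # Normalise each row so that it is a distribution over time points.
    return kernel / kernel.sum(axis=1, keepdims=True)


def series_association(q, k):
    """Scaled dot-product attention weights, softmax taken over the keys."""
    d = q.shape[-1]
    logits = q @ k.T / np.sqrt(d)
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    w = np.exp(logits)
    return w / w.sum(axis=1, keepdims=True)


rng = np.random.default_rng(0)
n, d = 8, 4
P = prior_association(np.full(n, 1.5))
S = series_association(rng.normal(size=(n, d)), rng.normal(size=(n, d)))
```

Each row of `P` peaks at its own time point and decays with distance, whereas the rows of `S` are free to put weight anywhere in the window.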

The system is trained in a minimax fashion. The base loss is always the reconstruction error, to which one adds a symmetrised KL-divergence term between the prior association and the series association (called the association discrepancy). The discrepancy term changes sign between the maximisation and the minimisation step, so the system alternately minimises and maximises the discrepancy between the two sets of association weights. In the minimisation step the series association is frozen (its gradient is stopped) and the prior association is pulled towards it, whereas in the maximisation step the prior association is frozen and the series association is pushed away from it.
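As a rough sketch of the quantities involved, the discrepancy and the sign-flipped loss could be written as below. The function names and the weight `lam` are assumptions made for this example, only a single layer is considered, and plain NumPy cannot express the freezing of one association, which in an autodiff framework would be done with a stop-gradient (detach) on the frozen term.

```python
import numpy as np


def kl_rows(a, b, eps=1e-12):
    """KL(a_i || b_i) for each row i of two row-stochastic matrices."""
    return np.sum(a * (np.log(a + eps) - np.log(b + eps)), axis=1)


def association_discrepancy(prior, series):
    """Symmetrised KL divergence per time point, shape (N,)."""
    return kl_rows(prior, series) + kl_rows(series, prior)


def total_loss(x, x_hat, prior, series, lam):
    """Reconstruction error minus lam times the summed discrepancy.

    lam > 0 rewards a large discrepancy (maximisation step);
    lam < 0 penalises it (minimisation step).
    """
    recon = np.sum((x - x_hat) ** 2)
    return recon - lam * np.sum(association_discrepancy(prior, series))


rng = np.random.default_rng(0)


def random_rows(n):
    """Hypothetical helper: a random row-stochastic matrix for the demo."""
    m = rng.random((n, n))
    return m / m.sum(axis=1, keepdims=True)


prior, series = random_rows(6), random_rows(6)
x = rng.normal(size=(6, 3))
x_hat = x + 0.1
loss_max = total_loss(x, x_hat, prior, series, lam=3.0)
loss_min = total_loss(x, x_hat, prior, series, lam=-3.0)
```

The two losses share the same reconstruction term and differ only in the sign of the discrepancy term, which is what makes the alternating scheme a minimax game.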

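This training mechanism forces series associations to be long-range and complex, i.e. to pay attention to non-adjacent time points. Nominal points should still be reconstructed relatively well under this constraint and end up with a large discrepancy. Anomalies, whose associations are mostly local, are reconstructed poorly and keep a small discrepancy. The anomaly score is then simply the point-wise product of the softmax of the negated association discrepancy and the reconstruction error, so a small discrepancy and a large reconstruction error both increase the score.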
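A toy computation of this score, assuming the per-point discrepancy is already available, might look as follows. Note that in [Xu22A] the softmax is applied to the negated discrepancy, so that points with a small discrepancy receive a large weight. The function name and the numbers are invented for illustration.

```python
import numpy as np


def anomaly_score(discrepancy, x, x_hat):
    """Softmax of the negated discrepancy times the squared reconstruction error.

    discrepancy: shape (N,), association discrepancy per time point.
    x, x_hat:    shape (N, d), input window and its reconstruction.
    """
    z = -discrepancy
    z = z - z.max()  # numerical stability
    weights = np.exp(z) / np.exp(z).sum()
    recon_error = np.sum((x - x_hat) ** 2, axis=-1)
    return weights * recon_error


# Toy window: the point at index 2 has a small discrepancy and a poor reconstruction.
discrepancy = np.array([2.0, 2.1, 0.3, 1.9])
x = np.zeros((4, 2))
x_hat = np.array([[0.1, 0.0], [0.0, 0.1], [1.0, 1.0], [0.1, 0.1]])
scores = anomaly_score(discrepancy, x, x_hat)
```

Here the point at index 2 receives by far the highest score, because both factors point in the same direction.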

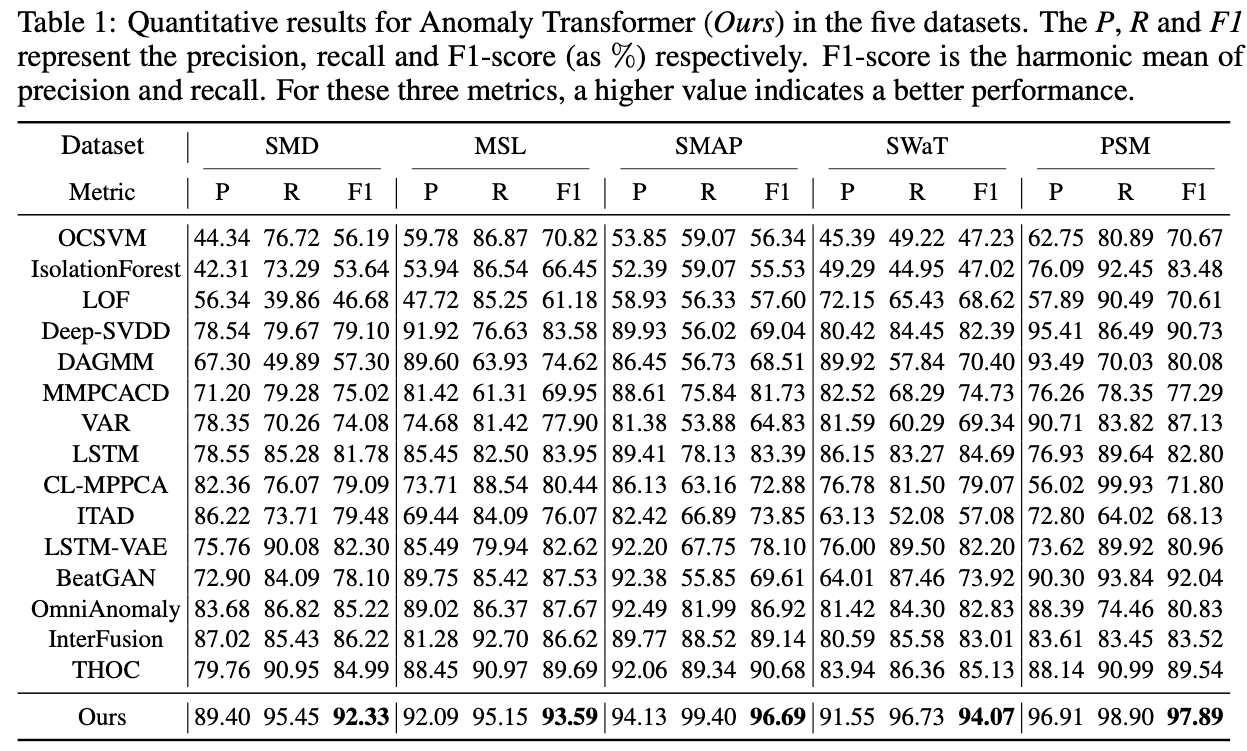

The authors evaluate their approach on 6 anomaly detection tasks, of which 3 are synthetic, to study the behaviour in isolation, and 3 are real-world examples. They compare against 18 other approaches, ranging from classical to deep learning methods. The anomaly transformer shows excellent results throughout the experiments.

In my opinion the association-based criterion is a very interesting new approach to anomaly detection. Also, the minimax approach for training the transformer is an innovation worth mentioning.